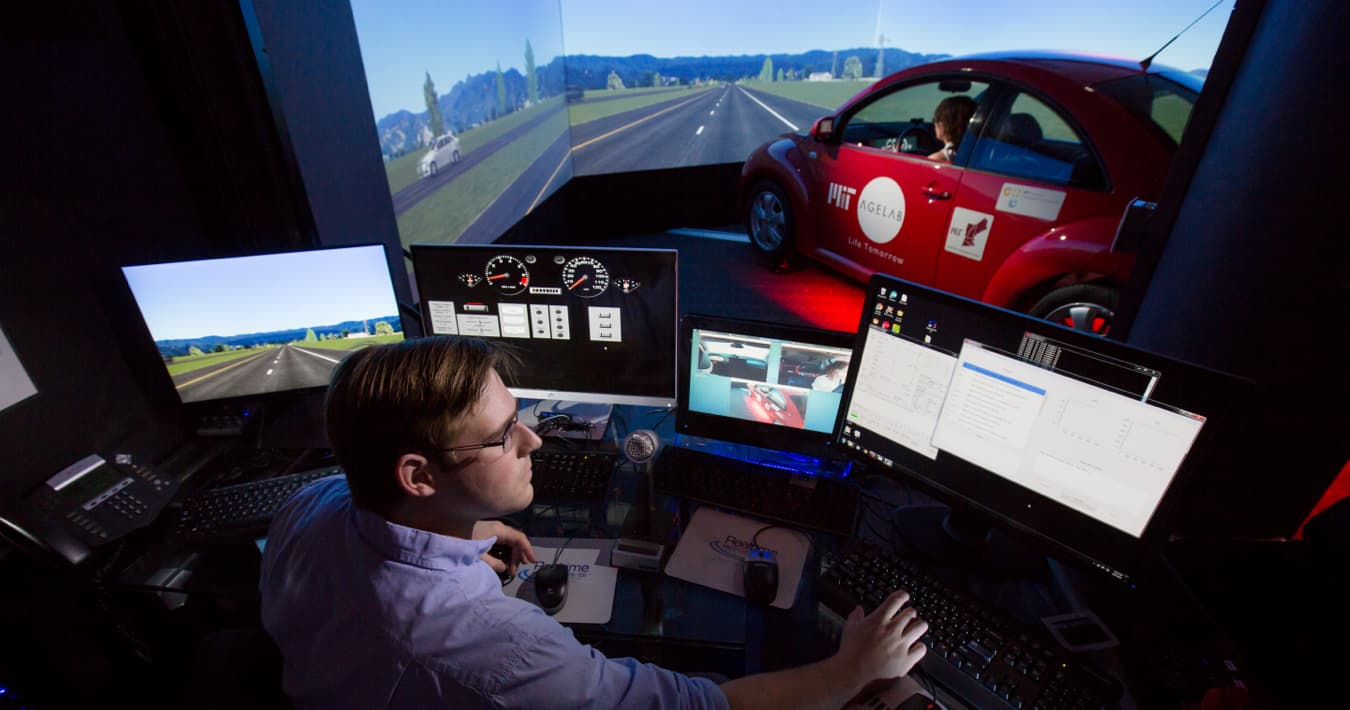

MIT DriveSeg Dataset for Dynamic Driving Scene Segmentation

To date, self-driving data made available to the research community has consisted primarily of troves of static, single images that can be used to identify and track common objects found in and around the road, such as bicycles, pedestrians, or traffic lights, through the use of “bounding boxes.”

By contrast, DriveSeg contains more precise, pixel-level representations of many of these same common road objects, but through the lens of a continuous video driving scene. This type of full scene segmentation can be particularly helpful for identifying more amorphous objects – such as road construction and vegetation – that do not always have such defined and uniform shapes. The dataset consists of two parts:

DriveSeg (Manual)

A forward-facing, frame-by-frame, pixel-level semantically labeled dataset captured from a moving vehicle during continuous daylight driving through a crowded city street.

The dataset can be downloaded from the IEEE DataPort or demoed as a video.

Technical Summary:

Video data - 2 minutes 47 seconds (5,000 frames), 1080p (1920x1080), 30 fps

Class definitions (12) - vehicle, pedestrian, road, sidewalk, bicycle, motorcycle, building, terrain (horizontal vegetation), vegetation (vertical vegetation), pole, traffic light, and traffic sign
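As a rough illustration of how the 12 classes might be used when working with per-frame label masks, the sketch below counts pixels per class in a small mask. The integer IDs (taken here from the order in which the classes are listed) and the nested-list mask layout are assumptions made for this example, not the dataset's documented encoding; consult the technical report for the actual label format.

```python
from collections import Counter

# The 12 class names as listed in the summary. The integer IDs (list order)
# are an assumption for illustration, not the dataset's documented encoding.
CLASS_NAMES = [
    "vehicle", "pedestrian", "road", "sidewalk", "bicycle", "motorcycle",
    "building", "terrain", "vegetation", "pole", "traffic light", "traffic sign",
]


def class_pixel_counts(mask):
    """Count pixels per class name in a 2-D mask of integer class IDs."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {CLASS_NAMES[i]: n for i, n in sorted(counts.items())}


# Tiny hypothetical 2x3 mask: road (2), vehicle (0), sidewalk (3).
toy_mask = [
    [2, 2, 0],
    [2, 3, 3],
]
print(class_pixel_counts(toy_mask))
```

Per-class pixel counts of this kind are a common first check on class balance before training a segmentation model.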

DriveSeg (Semi-auto)

A forward-facing, frame-by-frame, pixel-level semantically labeled dataset (coarsely annotated through a novel semiautomatic annotation approach developed by MIT) captured from moving vehicles driving in a range of real-world scenarios drawn from MIT Advanced Vehicle Technology (AVT) Consortium data.

The dataset can be downloaded from the IEEE DataPort.

Technical Summary:

Video data - Sixty-seven 10-second 720p (1280x720) 30 fps videos (20,100 frames)

Class definitions (12) - vehicle, pedestrian, road, sidewalk, bicycle, motorcycle, building, terrain (horizontal vegetation), vegetation (vertical vegetation), pole, traffic light, and traffic sign
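The frame totals quoted in the two technical summaries follow from clip count, duration, and frame rate. The short sketch below reproduces that arithmetic; the function name is hypothetical. Note that 2 minutes 47 seconds at 30 fps works out to 5,010 frames nominally, so the stated 5,000 for DriveSeg (Manual) is presumably a rounded figure or reflects an approximate duration.

```python
def frame_count(num_videos, seconds_per_video, fps):
    """Nominal number of frames for a set of equal-length videos."""
    return num_videos * seconds_per_video * fps


# DriveSeg (Semi-auto): 67 videos, 10 seconds each, 30 fps.
semi_auto_frames = frame_count(67, 10, 30)

# DriveSeg (Manual): one video of 2 min 47 s (167 s) at 30 fps.
manual_nominal_frames = frame_count(1, 2 * 60 + 47, 30)

print(semi_auto_frames)        # 20100, matching the stated 20,100 frames
print(manual_nominal_frames)   # 5010, close to the stated 5,000 frames
```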

Technical Documentation and Related Research

Ding, L., Terwilliger, J., Sherony, R., Reimer, B. & Fridman, L. (2020). MIT DriveSeg (Manual) Dataset for Dynamic Driving Scene Segmentation. Massachusetts Institute of Technology AgeLab Technical Report 2020-1, Cambridge, MA. (pdf)

Ding, L., Terwilliger, J., Sherony, R., Reimer, B. & Fridman, L. (2020). MIT DriveSeg (Manual) Dataset. IEEE DataPort. DOI: 10.21227/mmke-dv03.

Ding, L., Glazer, M., Terwilliger, J., Reimer, B. & Fridman, L. (2020). MIT DriveSeg (Semi-auto) Dataset: Large-scale Semi-automated Annotation of Semantic Driving Scenes. Massachusetts Institute of Technology AgeLab Technical Report 2020-2, Cambridge, MA. (pdf)

Ding, L., Glazer, M., Terwilliger, J., Reimer, B. & Fridman, L. (2020). MIT DriveSeg (Semi-auto) Dataset. IEEE DataPort. DOI: 10.21227/nb3n-kk46.

Ding, L., Terwilliger, J., Sherony, R., Reimer, B. & Fridman, L. (2019). Value of Temporal Dynamics Information in Driving Scene Segmentation. arXiv preprint arXiv:1904.00758. (link)

Attribution and Contact Information

This work was done in collaboration with the Toyota Collaborative Safety Research Center (CSRC). For more information, click here.

For any questions related to this dataset, or for requests to remove identifying information, please contact driveseg@mit.edu.